Implementing Speech Emotion Recognition for Critical Market Insights

Client

Our client, a leading market research firm, wanted deeper insight into consumer sentiment and preferences. They planned to implement a Speech Emotion Recognition (SER) system to uncover emotional insights and trends. Their objective was to analyze large volumes of audio from customer surveys and focus groups and use the findings to inform marketing strategy and product development decisions.

Challenges

- Build a Speech Emotion Recognition system that predicts the user’s emotion based on a spoken utterance.

- The system should achieve high accuracy in recognizing and classifying emotions from speech signals.

- The system should perform well across different emotions, avoiding biases towards specific emotions.

- The system should take conversational context into account, using previous utterances or dialogue turns to refine its emotional understanding.

Approach

- Collected four diverse datasets:

  - Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS)

  - Crowd-Sourced Emotional Multimodal Actors Dataset (CREMA-D)

  - Surrey Audio-Visual Expressed Emotion (SAVEE)

  - Toronto Emotional Speech Set (TESS)

- Split the combined data into training, validation, and test sets.

- Augmented the data with random pitch shifting, time stretching, and background-noise addition to create a more balanced class distribution.

- Extracted Mel-Frequency Cepstral Coefficients (MFCCs) from the audio clips as input features.
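The splitting and augmentation steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's pipeline: it assumes clips are already loaded as NumPy arrays, uses a hypothetical stratified 80/10/10 split, and substitutes white Gaussian noise at a hypothetical 20 dB SNR for real background recordings. All function names are hypothetical. Augmenting only the training split, after splitting, keeps noisy copies of validation or test clips out of training. Pitch shifting and time stretching are not reimplemented here; librosa provides `librosa.effects.pitch_shift` and `librosa.effects.time_stretch` for those.

```python
from collections import defaultdict
import random

import numpy as np


def stratified_split(labels, val_frac=0.1, test_frac=0.1, seed=0):
    """Return (train, val, test) index lists, preserving each class's share in every split."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)
        n_val = round(len(idxs) * val_frac)
        test.extend(idxs[:n_test])
        val.extend(idxs[n_test:n_test + n_val])
        train.extend(idxs[n_test + n_val:])
    return train, val, test


def add_noise(signal, snr_db, rng):
    """Mix white Gaussian noise (assumed stand-in for background noise) into `signal` at snr_db."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    return signal + rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)


def balance_with_noise(waves, labels, train_idx, snr_db=20.0, seed=0):
    """Oversample minority classes of the TRAINING split with noisy copies until counts match."""
    rng = np.random.default_rng(seed)
    by_class = defaultdict(list)
    for i in train_idx:
        by_class[labels[i]].append(i)
    target = max(len(idxs) for idxs in by_class.values())
    out_waves = [waves[i] for i in train_idx]
    out_labels = [labels[i] for i in train_idx]
    for label, idxs in by_class.items():
        for k in range(target - len(idxs)):
            out_waves.append(add_noise(waves[idxs[k % len(idxs)]], snr_db, rng))
            out_labels.append(label)
    return out_waves, out_labels


# Toy usage: an imbalanced two-class set of 1-second, 16 kHz tones
sr = 16000
labels = ["Happy"] * 50 + ["Sad"] * 30
waves = [np.sin(2 * np.pi * 220 * np.arange(sr) / sr) for _ in labels]
train_idx, val_idx, test_idx = stratified_split(labels)
train_waves, train_labels = balance_with_noise(waves, labels, train_idx)
print(len(train_idx), len(val_idx), len(test_idx))  # 64 8 8
print(train_labels.count("Happy"), train_labels.count("Sad"))  # 40 40
```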

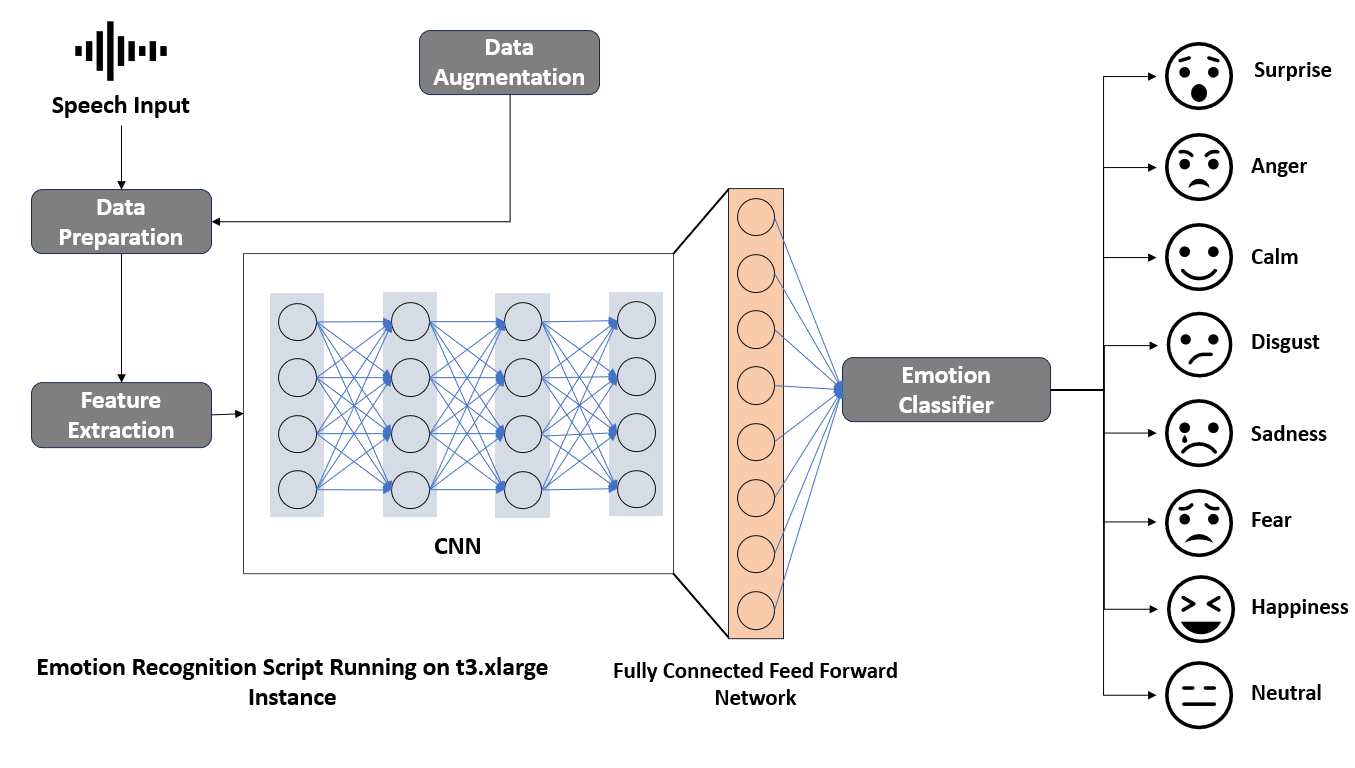
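MFCC extraction follows a standard chain: pre-emphasis, framing with a Hamming window, power spectrum, mel filterbank, log, and a DCT-II. The NumPy sketch below assumes 16 kHz mono audio, 25 ms windows with a 10 ms hop, 40 mel bands, and 20 coefficients; these parameters and the helper names are assumptions, since the case study does not state them. In practice `librosa.feature.mfcc` is the usual call; its defaults (mel formula, no pre-emphasis) differ, so its values will not match this sketch exactly.

```python
import numpy as np


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters evenly spaced on the mel scale; shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):  # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / (right - center)
    return fbank


def dct_ii_ortho(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    i = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T


def mfcc(signal, sr=16000, n_mfcc=20, n_mels=40, n_fft=512, win=400, hop=160):
    """Return an (n_frames, n_mfcc) MFCC matrix for a mono signal."""
    emphasized = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])  # pre-emphasis
    if len(emphasized) < win:  # guarantee at least one full frame
        emphasized = np.pad(emphasized, (0, win - len(emphasized)))
    n_frames = 1 + (len(emphasized) - win) // hop
    idx = np.arange(win)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(win)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    log_mel = np.log(power @ mel_filterbank(n_mels, n_fft, sr).T + 1e-10)
    return dct_ii_ortho(log_mel, n_mfcc)


signal = np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)  # 1 s, 440 Hz test tone
features = mfcc(signal)
print(features.shape)  # (98, 20)
```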

- Designed a deep learning model architecture comprising:

  - 4 convolutional layers alternating with max-pooling layers

  - 2 dropout layers to mitigate overfitting

  - A fully connected (dense) network with a softmax output activation

- Configured the model with the Adam optimizer and categorical cross-entropy loss.

- Trained the model on the training set and evaluated it on the held-out test set, using an NVIDIA A10 GPU.

- Evaluated model performance using overall accuracy, a per-class classification report, and a confusion matrix.

- Classified utterances into eight emotion categories: Surprise, Angry, Calm, Disgust, Sad, Fear, Happy, and Neutral.

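The model architecture described in the approach can be illustrated with a forward pass in plain NumPy. The filter counts (32, 64, 64, 128), kernel size 5, ReLU activations, pooling size 2, dropout rate 0.3, the placement of the two dropout layers, and all function names are hypothetical, because the case study does not specify them. The input is an MFCC matrix of shape (frames, coefficients), and the convolutions run along the time axis.

```python
import numpy as np


def conv1d_same(x, kernels, bias):
    """1-D cross-correlation with 'same' padding. x: (time, in_ch); kernels: (k, in_ch, out_ch)."""
    k = kernels.shape[0]
    pad_left = (k - 1) // 2
    padded = np.pad(x, ((pad_left, k - 1 - pad_left), (0, 0)))
    windows = np.stack([padded[t:t + k] for t in range(x.shape[0])])  # (time, k, in_ch)
    return np.einsum("tki,kio->to", windows, kernels) + bias


def max_pool1d(x, size=2):
    """Non-overlapping max pooling along time; a trailing partial window is dropped."""
    t = (x.shape[0] // size) * size
    return x[:t].reshape(-1, size, x.shape[1]).max(axis=1)


def dropout(x, rate, rng, training):
    """Inverted dropout: zero units with probability `rate` in training and rescale the rest."""
    if not training or rate == 0.0:
        return x
    return x * (rng.random(x.shape) >= rate) / (1.0 - rate)


def init_params(time_steps, n_mfcc, n_classes, channels=(32, 64, 64, 128), k=5, seed=0):
    rng = np.random.default_rng(seed)
    params, in_ch, t = {}, n_mfcc, time_steps
    for i, out_ch in enumerate(channels):
        params[f"conv{i}_w"] = rng.normal(0, np.sqrt(2.0 / (k * in_ch)), size=(k, in_ch, out_ch))
        params[f"conv{i}_b"] = np.zeros(out_ch)
        in_ch, t = out_ch, t // 2  # each pooling stage halves the time axis
    params["dense_w"] = rng.normal(0, np.sqrt(1.0 / (t * in_ch)), size=(t * in_ch, n_classes))
    params["dense_b"] = np.zeros(n_classes)
    return params


def forward(mfcc_frames, params, rng, training=False):
    """mfcc_frames: (time, n_mfcc). Returns class probabilities of shape (n_classes,)."""
    h = mfcc_frames
    for i in range(4):  # 4 conv layers, each followed by max pooling
        h = np.maximum(conv1d_same(h, params[f"conv{i}_w"], params[f"conv{i}_b"]), 0.0)
        h = max_pool1d(h, 2)
        if i in (1, 3):  # 2 dropout layers (placement assumed)
            h = dropout(h, 0.3, rng, training)
    logits = h.reshape(-1) @ params["dense_w"] + params["dense_b"]
    exp = np.exp(logits - logits.max())  # softmax, shifted for numerical stability
    return exp / exp.sum()


params = init_params(time_steps=98, n_mfcc=20, n_classes=8)
rng = np.random.default_rng(1)
probs = forward(rng.normal(size=(98, 20)), params, rng)
print(probs.shape, round(float(probs.sum()), 6))  # (8,) 1.0
```

In a framework such as Keras the same stack would be assembled from `Conv1D`, `MaxPooling1D`, `Dropout`, `Flatten`, and `Dense` layers; the NumPy version only makes the layer order and tensor shapes explicit.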
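The softmax output, categorical cross-entropy loss, and Adam optimizer named in the approach can be written out directly. To keep the sketch short, it trains only a linear softmax layer on fixed feature vectors rather than the full convolutional model, using synthetic data and hypothetical hyperparameters (learning rate 0.01, 200 full-batch steps); the function and class names are also hypothetical. For a softmax output, the gradient of the mean cross-entropy with respect to the logits reduces to (probabilities minus one-hot targets) divided by N, which is what the training loop uses.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def categorical_cross_entropy(probs, one_hot):
    return -np.mean(np.sum(one_hot * np.log(probs + 1e-12), axis=1))


class Adam:
    """Adam with bias-corrected first and second moment estimates."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = {}, {}, 0

    def step(self, params, grads):
        self.t += 1
        for name, g in grads.items():
            m = self.beta1 * self.m.get(name, np.zeros_like(g)) + (1 - self.beta1) * g
            v = self.beta2 * self.v.get(name, np.zeros_like(g)) + (1 - self.beta2) * g ** 2
            self.m[name], self.v[name] = m, v
            m_hat = m / (1 - self.beta1 ** self.t)
            v_hat = v / (1 - self.beta2 ** self.t)
            params[name] -= self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def train_softmax_head(features, labels, n_classes, epochs=200, lr=1e-2, seed=0):
    rng = np.random.default_rng(seed)
    params = {
        "W": rng.normal(0.0, 0.01, size=(features.shape[1], n_classes)),
        "b": np.zeros(n_classes),
    }
    one_hot = np.eye(n_classes)[labels]
    opt, history = Adam(lr=lr), []
    for _ in range(epochs):
        probs = softmax(features @ params["W"] + params["b"])
        history.append(categorical_cross_entropy(probs, one_hot))
        d_logits = (probs - one_hot) / len(labels)  # dLoss/dLogits for softmax + CCE
        opt.step(params, {"W": features.T @ d_logits, "b": d_logits.sum(axis=0)})
    return params, history


# Toy usage: three Gaussian clusters in an 8-dimensional feature space
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=300)
X = np.eye(3, 8)[labels] * 4.0 + rng.normal(size=(300, 8))
params, history = train_softmax_head(X, labels, n_classes=3)
accuracy = np.mean(np.argmax(X @ params["W"] + params["b"], axis=1) == labels)
print(f"loss {history[0]:.3f} -> {history[-1]:.3f}, train accuracy {accuracy:.2f}")
```

In Keras, the equivalent configuration is `model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])`.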
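The evaluation step can be sketched with two small helpers. They are hypothetical and mirror what `sklearn.metrics.confusion_matrix` and `sklearn.metrics.classification_report` report: a matrix with true classes as rows and predicted classes as columns, plus per-class precision, recall, F1, and support. Macro-averaged F1 weights every emotion equally, so it is a useful check on the requirement that the system avoid bias toward specific emotions.

```python
EMOTIONS = ["Surprise", "Angry", "Calm", "Disgust", "Sad", "Fear", "Happy", "Neutral"]


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def classification_report(y_true, y_pred, labels):
    """Return (accuracy, macro_f1, per-class metrics dict)."""
    matrix = confusion_matrix(y_true, y_pred, labels)
    report = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fp = sum(row[i] for row in matrix) - tp  # predicted as `label` but wrong
        fn = sum(matrix[i]) - tp  # truly `label` but missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}
    accuracy = sum(matrix[i][i] for i in range(len(labels))) / len(y_true)
    macro_f1 = sum(m["f1"] for m in report.values()) / len(labels)
    return accuracy, macro_f1, report


# Toy usage on three of the eight classes; pass EMOTIONS as `labels` for a full evaluation.
y_true = ["Happy", "Happy", "Sad", "Sad", "Angry"]
y_pred = ["Happy", "Sad", "Sad", "Sad", "Happy"]
accuracy, macro_f1, report = classification_report(y_true, y_pred, ["Angry", "Happy", "Sad"])
print(f"accuracy={accuracy:.2f} macro_f1={macro_f1:.3f}")  # accuracy=0.60 macro_f1=0.433
```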

Impact

- Achieved an overall accuracy of 85%, demonstrating the system’s proficiency in correctly classifying emotional states.

- 23% increase in decision-making accuracy based on emotional insights, leading to more informed strategies.

- Identified previously overlooked emotional trends, leading to an 18% improvement in customer understanding.

- 15% increase in campaign effectiveness and customer engagement due to emotionally resonant messaging.